For years, vendors and integrators have sized CPU (compute) resources for new environments by licking an index finger and feeling the wind. Not exactly accurate, is it? I have been involved with hundreds, if not close to thousands, of designs over the years. I have seen the big boys come in and ask, "How many CPUs do you have today? And how many do you need tomorrow?", expecting the customer to know everything about the new CPUs the vendors are selling and how to size them. I have seen vendors ask "How many systems do you have today?", multiply by 2, and quote that many CPUs. I have even had vendors come into my shops, ask "How many cores do you have today?", and then divide by 2, because the new CPUs are "50% better." What?

But better than what? If you noticed, they never asked what CPUs we were running today, never asked what kind of workload we had (besides "is it physical or virtual?"), never asked about application requirements, never looked at current load, and never had any real numbers. So what the heck were they doing?

Over the years, the main way to size new CPUs for an environment was to match the current number of cores and then add 15-20% for growth. Have 500 cores today? You probably need 600 tomorrow. But a couple of things were going on that no one really noticed:

- Intel and AMD were continually fighting to best one another, with Intel really pulling ahead (yeah, yeah, this is an opinion, but who is the market leader, hm?)

- Intel has continually released processors with more and more cores, and from the early 2000s with faster and faster frequencies, though today we seem to have hit the frequency ceiling of current technology.

- Instead of focusing on frequency, they now focus on cores and efficiency.

- In the early days of system virtualization (2006-2010), physical systems were typically CPU bound, so with every refresh people bought more and more cores.

- Around the time Nehalem CPUs were released (2008-2009), environments stopped being CPU bound and started being bound by main memory or storage performance.

- People did not really notice and kept buying more and more CPU each time. Now, 4-6 refresh cycles later, they have environments with massive amounts of compute sitting at only 5-10% utilization, meaning the CPUs are doing nothing 90-95% of the time.
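For reference, the "match today's cores, then add 15-20%" rule described above fits in a couple of lines, which is part of the problem: nothing in it knows which CPU generation you are running or how busy those cores are. A minimal sketch (the function name is my own, purely illustrative):

```python
def naive_core_sizing(current_cores: int, growth_pct: int) -> int:
    """The 'old way': keep today's core count and add a flat growth buffer.

    Integer ceiling division avoids floating-point rounding surprises.
    """
    return -(-current_cores * (100 + growth_pct) // 100)


for growth in (15, 20):
    print(f"{growth}% growth: {naive_core_sizing(500, growth)} cores")
```

Notice there is no input for CPU model, frequency, or utilization at all.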

So what to do? WHAT TO DO?

Never fear: like all good things in computing, the solution was invented in the 1980s. The Standard Performance Evaluation Corporation (SPEC) was founded for exactly this purpose; you can visit SPEC's website to learn more if you are interested. The gist is that some really smart people realized there needed to be a standard way to measure CPU performance, so they developed several tools for measuring the performance of compute devices. One of the main ones is SPECint_rate2006, which runs compute-heavy integer workloads across all CPUs and cores and gives you a pretty decent look at the performance differences between processors.

SPEC is kind enough to publish these results on its website, but they are not always the easiest to read, and if you are trying to compare multiple systems quickly it can get frustrating.

Well, at least I was getting frustrated. I do anywhere from 1-10 sizings a week, more when teammates need help. With Intel's latest generation becoming available in vendor hardware, I was having to compare Broadwell with Skylake, Skylake with Skylake, and all sorts of really old systems (the kind that make you both laugh and cry) with Skylake.

You can find my tool on its own page, ewams.net/specintd.

It includes instructions, and I hope it is mostly self-explanatory to people who do this sort of thing. But in case you are wondering what this SPECint thing is, how to use it, what it means to you, and whether you accidentally the gigahertz, hopefully this post will help.

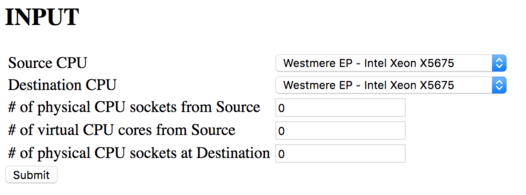

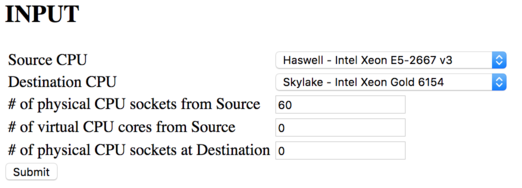

- Step 1 - Go to ewams.net/specintd/

- You can read the instructions and information provided

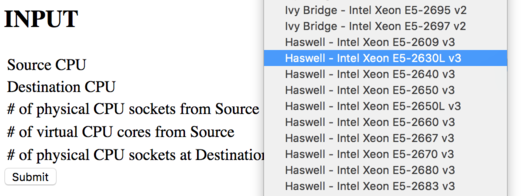

- In the first dropdown, titled "Source CPU", select the processors that you are currently running in your environment. This is literally what you have today.

For example, if your boxes are running Haswell E5-2667v3s, select those. If you are not sure which generation you are running but know your model number is E5-2667v3, note that I have ordered the list chronologically by generation, so look at the model numbers and select the correct one. This means v0 comes first, then v1, then v2, then v3, etc.

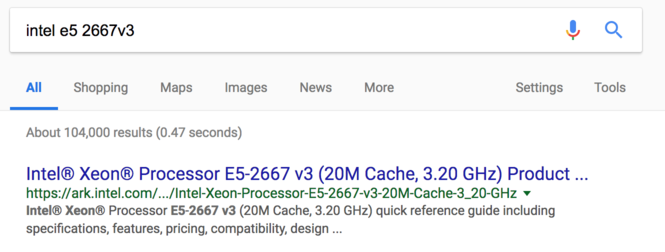

But be aware that Intel has changed its naming scheme every couple of generations, so sometimes you just have to know. If you are really lost, search for your model number in your favorite search engine; one of the first few links should be from ark.intel.com, and the top of that page shows the "codename", which can help narrow your search in my dropdown.

If you have multiple CPU models in your environment, you will have to do this process a few times, probably make a spreadsheet, and do some funky math. I can't do everything for you.
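The "funky math" for a mixed fleet can be as simple as adding up each group's published SPECint_rate2006 multiplied by how many of those servers you have. Here is a minimal sketch, assuming each group's rate is the published result at that server's socket count (a 2-socket result is not valid for a 4-socket box). The class and function names are hypothetical, and the fleet itself is made up; only the 840 and 2190 per-system rates come from the walkthrough later in this post:

```python
from dataclasses import dataclass


@dataclass
class SystemGroup:
    cpu_model: str
    systems: int            # number of servers in this group
    rate_per_system: float  # published SPECint_rate2006 at this server's socket count


def fleet_rate(groups: list[SystemGroup]) -> float:
    """Total SPECint_rate2006 across a mixed fleet (sum of systems x per-system rate)."""
    return sum(g.systems * g.rate_per_system for g in groups)


# Hypothetical fleet: 20 dual-socket E5-2667v3 servers plus 5 dual-socket Gold 6154 servers.
fleet = [
    SystemGroup("E5-2667v3", systems=20, rate_per_system=840),
    SystemGroup("Gold 6154", systems=5, rate_per_system=2190),
]
print(fleet_rate(fleet))
```

Once you have one total for the whole source environment, you can compare it against whatever destination CPU you are considering.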

- Now do the same thing for the "Destination CPU" field. This is the CPU you would like your new environment to run on. If you are not sure which CPU you want, that's fine; just select one from the latest generation, and you can always change it later. The tool saves your input when you submit, so it is easy to compare results from different processors.

- The next part is optional, but it can help your sizing a great deal. Count the number of CPU sockets in the current environment and put that number in the "# of physical CPU sockets from Source" field. Sockets are NOT the number of cores, and not the number of servers; they are the number of physical CPUs. A dual-way (dual-socket) server has 2 sockets. A virtualization environment with 15 hosts that each have 4 sockets has 60 sockets. If you have no idea how many sockets you have, ask the hardware vendor that sold you the equipment. If you still cannot figure it out, you can email me $500 and I'll walk you through the process of counting sockets.

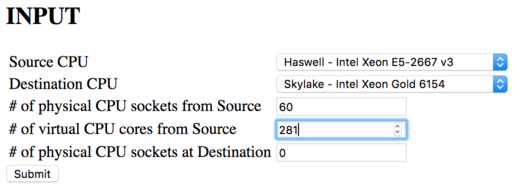
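Counting sockets is just hosts times sockets per host, summed over each kind of host you own. A tiny sketch (the function name is mine, for illustration only):

```python
def total_sockets(host_groups: list[tuple[int, int]]) -> int:
    """Sum sockets over (host_count, sockets_per_host) pairs. Sockets are not cores or servers."""
    return sum(hosts * sockets for hosts, sockets in host_groups)


print(total_sockets([(15, 4)]))          # 60: the 15 quad-socket hosts from the example
print(total_sockets([(10, 2), (5, 4)]))  # 40: a hypothetical mix of dual- and quad-socket hosts
```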

- Also optional: if you are running a virtualized environment, count the number of virtual CPU cores assigned to powered-on VMs and put that number in the "# of virtual CPU cores from Source" field. If you are running VMware, a tool called RVTools can connect to your ESXi hosts or vCenter and gather this information for you (PS - it can also report the number of sockets and the CPU model at the host level). If you are running a physical environment, leave the field at 0.

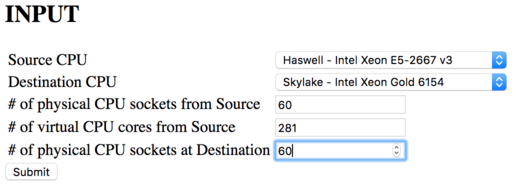
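If you export the VM inventory to CSV, adding up the vCPUs of powered-on VMs is a few lines of Python. This is a sketch under assumptions: I am assuming the export has a power-state column named `Powerstate` with the value `poweredOn`, and a vCPU column named `CPUs` (check these against the headers in your own export and adjust the parameters). The sample data is made up:

```python
import csv
import io


def powered_on_vcpus(csv_text: str, state_col: str = "Powerstate", cpu_col: str = "CPUs") -> int:
    """Sum vCPUs for powered-on VMs only; column names are assumptions, adjust to your export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(int(row[cpu_col]) for row in reader if row[state_col] == "poweredOn")


sample = """VM,Powerstate,CPUs
app01,poweredOn,4
db01,poweredOn,8
old01,poweredOff,2
"""
print(powered_on_vcpus(sample))  # 12
```

Powered-off VMs are skipped on purpose, since the field asks for vCPUs assigned to powered-on VMs.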

- And again optional: you can enter the number of sockets you want in the new environment (again, the number of physical CPUs, NOT the number of cores) in the "# of physical CPU sockets at Destination" field. But wait a second, how do you know how many sockets you want or need? You can start with the same number you entered in the source sockets field and then use the feedback the tool provides to right-size. Or you can think logically about things like space, weight, cooling, licensing, money, etc. and make some guesses from there.

- Hit that fancy submit button.

- Now the page refreshes and shows all sorts of information. Let's take a look at what it displays:

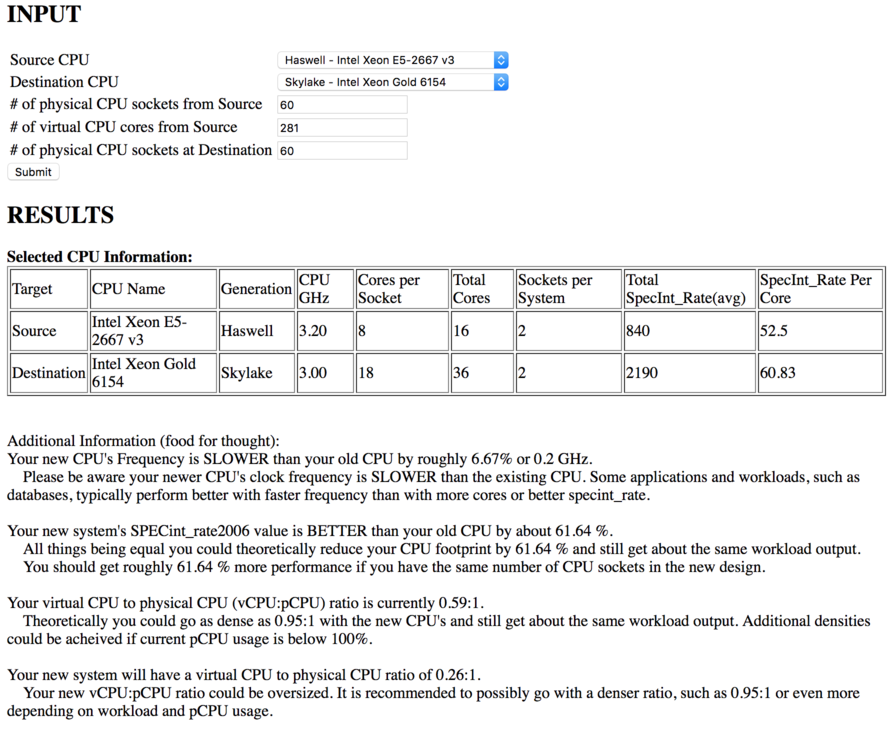

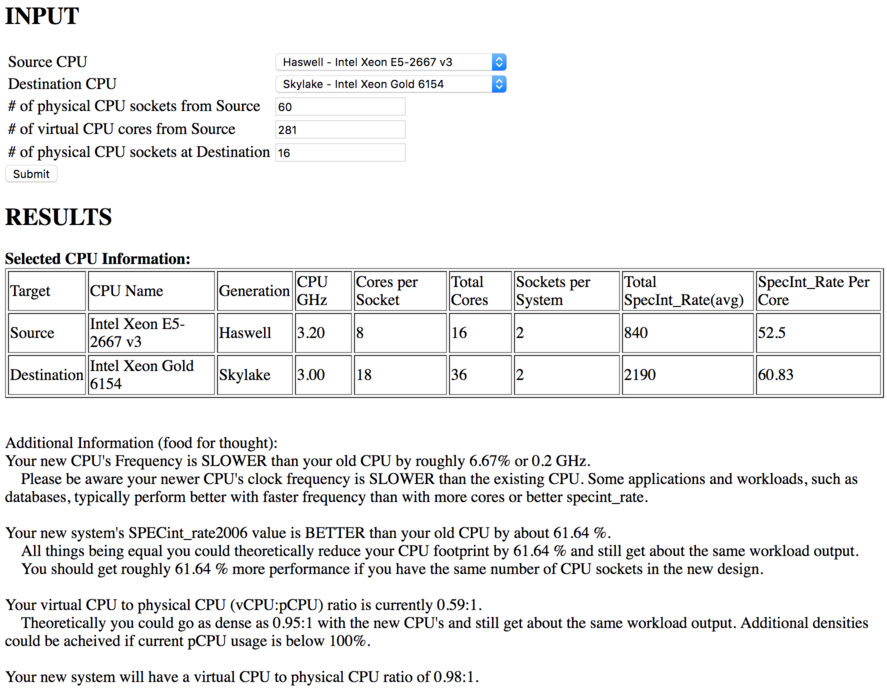

The first bit of info is a table comparing the selected CPUs, their model name, generation codename, frequency in GHz, cores per CPU, total cores per system, number of sockets per system, total SPECint_rate2006 value, and their SPECint_rate2006 value broken down per core.

This table lets you directly compare the two CPUs. Below that the tool will spit out information to assist with the comparison and things to look out for.
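The per-core column is easy to reproduce by hand: take the per-system SPECint_rate2006 and divide by the total physical cores in that system. I am assuming that is how the tool derives it; here it is with the two CPUs from the example that follows (8 cores per E5-2667v3, 18 per Gold 6154, two sockets each):

```python
def rate_per_core(rate_per_system: float, sockets: int, cores_per_socket: int) -> float:
    """SPECint_rate2006 per physical core for one published system result."""
    return rate_per_system / (sockets * cores_per_socket)


old = rate_per_core(840, sockets=2, cores_per_socket=8)    # E5-2667v3
new = rate_per_core(2190, sockets=2, cores_per_socket=18)  # Gold 6154
print(f"{old:.2f} vs {new:.2f} per core")  # 52.50 vs 60.83 per core
```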

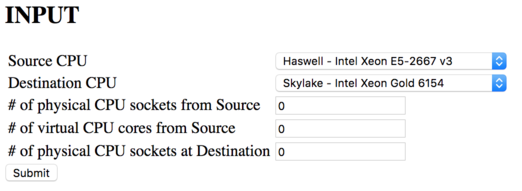

In this example, the original CPU's frequency is higher than the new one's, yet the new system's SPECint_rate is about 2.6 times higher (put the other way around, the old system delivers about 61% less). The SPECint_rate value is per system, with the number of sockets identified. Our example is a dual-socket system, so the 840 SPECint_rate value is for two E5-2667v3 CPUs, while the Skylake Gold 6154 system has a rate of 2190.
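Percentages get slippery here because it matters which number you divide by. A quick sketch showing the three common ways to express 840 versus 2190 (the function name is mine); the 61% figure appears to match the last reading, "how much less the old system delivers relative to the new one":

```python
def compare_rates(old: float, new: float) -> dict:
    return {
        "ratio": new / old,                     # how many times the old rate the new one is
        "pct_higher": (new - old) / old * 100,  # increase relative to the old system
        "pct_lower": (new - old) / new * 100,   # shortfall of the old system relative to the new
    }


r = compare_rates(840, 2190)
print(f"{r['ratio']:.2f}x, {r['pct_higher']:.1f}% higher, old is {r['pct_lower']:.1f}% lower")
# 2.61x, 160.7% higher, old is 61.6% lower
```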

The next two sections compare virtual CPU (vCPU) to physical CPU (pCPU) ratios. These are typically used only in virtualization environments, to understand how densely you can pack VMs onto your physical hosts. This theoretical example has a source ratio of 0.59:1. That's because 60 sockets times 8 cores per socket gives 480 total cores, and with only 281 virtual cores in the environment it does not even reach 1:1; this environment is severely underutilized. But take a look at the new environment: it is even more underutilized, since it has 18 cores per socket and the same number of sockets (1,080 cores, or about 0.26:1). You'll notice the tool also gives some recommendations on target density.
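The ratio itself is just vCPUs divided by physical cores (sockets times cores per socket; hyperthreads are not counted in this example). A sketch reproducing both numbers:

```python
def vcpu_to_pcpu(vcpus: int, sockets: int, cores_per_socket: int) -> float:
    """vCPUs assigned to powered-on VMs per physical core (hyperthreads not counted)."""
    return vcpus / (sockets * cores_per_socket)


print(f"source:      {vcpu_to_pcpu(281, 60, 8):.2f}:1")   # 0.59:1
print(f"destination: {vcpu_to_pcpu(281, 60, 18):.2f}:1")  # 0.26:1
```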

And that's it! Just play around with the tool and you can do tons of different things. Here are a few more examples of what it can help you with:

Example 2:

Based on the first sizing, you can change things like the destination CPU, the number of sockets at the destination, etc. As an example, I changed the number of destination sockets from 60 to 16 but kept everything else the same (16 sockets times 18 cores is 288 cores, so the ratio climbs to roughly 0.98:1). After hitting submit, pretty much all of the information is the same except the last part about vCPU:pCPU. I did this just so you could see how the results change.

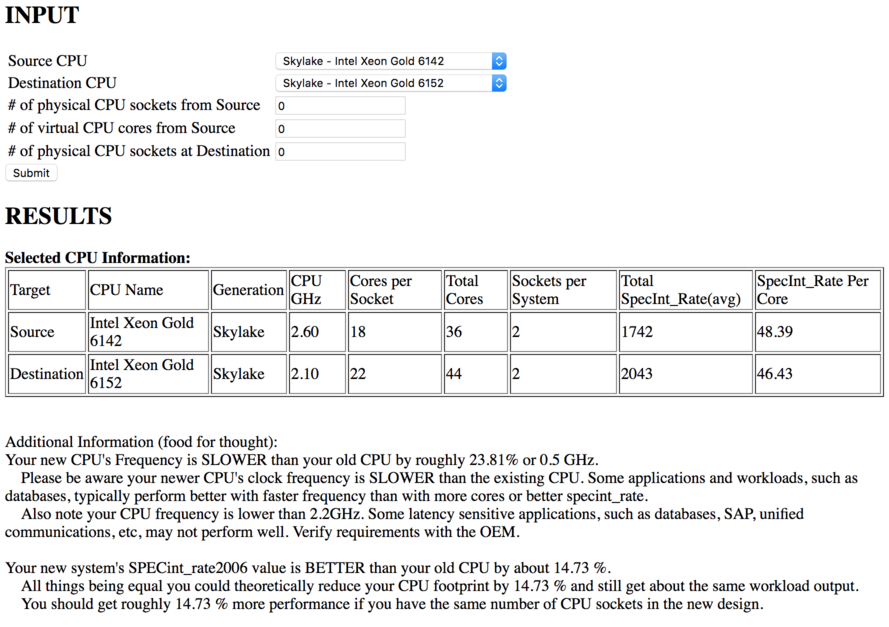
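If you want to see the trend before clicking submit a bunch of times, you can sweep a few destination socket counts and watch the density change. A small sketch using the 281 vCPUs and 18-core Gold 6154 from the example; the socket counts other than 60 and 16 are arbitrary picks:

```python
CORES_PER_SOCKET = 18  # Gold 6154


def ratios_by_socket_count(vcpus: int, cores_per_socket: int, socket_counts: list[int]) -> dict:
    """vCPU:pCPU ratio for each candidate destination socket count."""
    return {s: vcpus / (s * cores_per_socket) for s in socket_counts}


for sockets, ratio in ratios_by_socket_count(281, CORES_PER_SOCKET, [60, 32, 16, 8]).items():
    print(f"{sockets:>2} sockets: {sockets * CORES_PER_SOCKET:>4} cores, {ratio:.2f}:1")
```

Fewer sockets means a denser ratio; where you stop depends on your own target density and how much room you want for failover and growth.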

Example 3:

Sometimes you just need to know the stats or differences between CPUs, even within the same generation. As the Intel Skylakes are still fairly new, you may not know the differences between the Bronze, Silver, Gold, and Platinum editions, or even between models within an edition. You can use the tool to compare two similar processors, for example the Gold 6142 and the Gold 6152. When just comparing two CPUs, you do not need to enter any data in the socket and core count fields. You can see the results below.

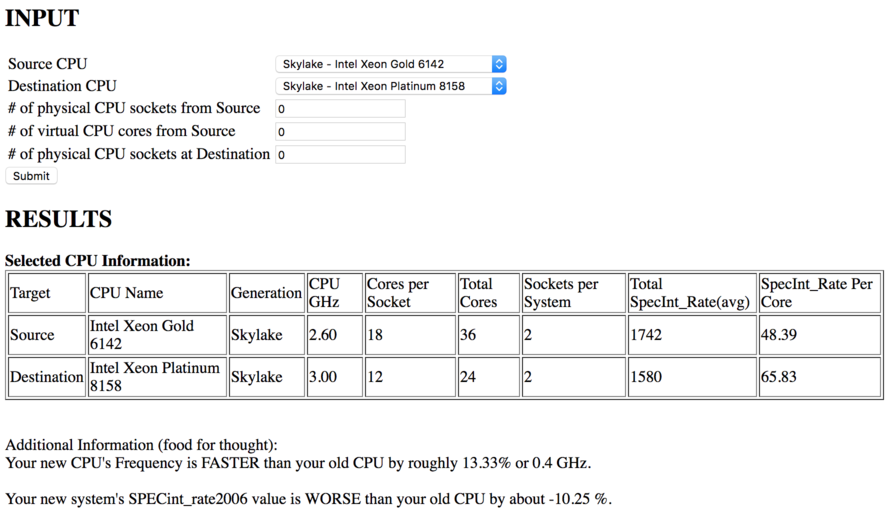

Example 4:

Sometimes the results don't make sense at first. For example, if you compare the Gold 6142 to the Platinum 8158, the 8158 is surprisingly worse from an overall SPECint_rate standpoint. This is primarily because it has 4 fewer cores per socket (12 versus 16), so there is less total work it can do. But if you compare the SPECint_rate per core, the 8158 really shines. This suggests that for an application that leans on single-thread performance, such as a database engine, or services that run a low number of threads, the 8158 would probably perform better than the 6142. The 6142 might be the better choice for a big virtualization environment where you want dense ratios, or for heavily concurrent applications.

Example 5:

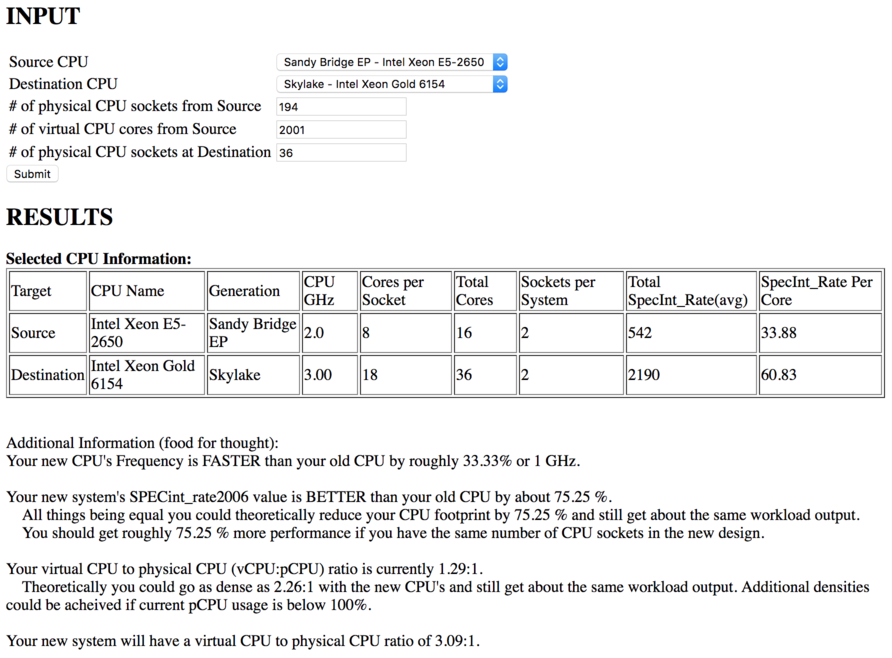

This last example is a real scenario I recently did for a customer. Their source environment was a mix of dual- and quad-socket systems with the Intel Xeon E5-2650, which is from the Sandy Bridge generation. The workload was 100% SQL Server. They approached me with the problem of designing an environment that gave the same or better performance while reducing their SQL Server licensing costs. Their licensing was per core and, due to several circumstances, they had to pay for 2001 cores. Holy cow!

Database workloads typically benefit more from higher frequencies than from more cores, though SQL Server can take advantage of more cores when they are available. Using this tool, I saw their existing systems were running at 2.0GHz, which is pretty slow in the database world. I played around with the tool for a while and looked at some of the Platinum Skylake CPUs, but could not find one with a good price/performance ratio. After looking at the Gold editions, I selected the Gold 6154, and it seemed like a match made in heaven.

Take a look at the result below. Pay attention to how the tool points out the frequency improvement, the overall SPECint_rate improvement, and the suggestions on vCPU:pCPU ratios. The table is also helpful: because Sandy Bridge and Skylake are several generations apart (and because of the frequency change), the 6154 has almost 50% better single-core performance than the E5-2650! All things being equal, that means we could immediately get a 50% performance boost. Overall, a Sandy Bridge system delivers roughly 75% less SPECint_rate than a 6154 system, so we could SHRINK their environment by 75% and still get the same workload output. Guess what we did for this customer? A 75% reduction, with an ROI of about 1 month, because that's how long it took to migrate all of the databases to the new environment. If the sizing had been done the old way, core for core or socket for socket, they would have been in bad shape.
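When licensing is per core, the number that matters is how many cores you end up licensing after you match the old throughput. The E5-2650 figures for this customer aren't listed in the post, so this sketch reuses the earlier walkthrough numbers instead (30 dual-socket E5-2667v3 systems at 840, moving to dual-socket Gold 6154 at 2190). The function name is hypothetical, and it sizes on SPECint_rate2006 alone, ignoring memory, storage, and any minimums your license terms impose:

```python
import math


def licensed_cores_needed(source_rate_total: float,
                          dest_rate_per_system: float,
                          dest_sockets_per_system: int,
                          dest_cores_per_socket: int) -> int:
    """Cores to license at the destination when sizing purely on SPECint_rate2006.

    Rounds up to whole sockets, since you buy (and license) whole CPUs.
    """
    rate_per_socket = dest_rate_per_system / dest_sockets_per_system
    sockets = math.ceil(source_rate_total / rate_per_socket)
    return sockets * dest_cores_per_socket


source_cores = 60 * 8   # 60 E5-2667v3 sockets x 8 cores
source_rate = 30 * 840  # 30 dual-socket systems at 840 each
dest_cores = licensed_cores_needed(source_rate, 2190, 2, 18)
print(dest_cores, f"({(source_cores - dest_cores) / source_cores:.0%} fewer licensed cores)")
```

Plug in your own source total and destination CPU to see whether a faster, lower-core-count part or a bigger one wins on licensing.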

Conclusion

There are a ton of factors to consider when sizing computing environments: workload, politics, financials, CPU, RAM, storage, networking, power, cooling, space, etc. Using this tool, you can at least get a better idea of how to size the CPUs by looking at frequency, core count, and SPEC's SPECint_rate2006. Just don't forget that a tool is there to help get the job done, not to do the job for you.

PS - The plan is to add features such as CPU recommendations, environment size goals, better sizing results based on existing CPU usage percentage, and some other ideas. If you have any feedback, please shoot it my way.

*disclaimer* This document and tool are my own and do not represent any other entity. I will not be held liable for anything bad that comes of them. SPEC is its own entity, and I take no credit for the amazing work it has done over the years.

Written by Eric Wamsley

Posted: Aug 4, 2018 1:08pm