Introduction

Should you configure more cores or more sockets for your VMs in ESXi? It is the age-old debate for virtual machines. Depending on which document you read there seems to be no consistency, even among documents from the same vendor. For a long while it seemed the single-socket-just-add-cores camp was in the lead. But then, the sockets-are-better-leave-cores-at-one camp started to make headway. It seems now the "right answer" is somewhere in the middle or, depending on the hypervisor, "doesn't matter" wins out. Who. To. Trust?!

In this post we will be evaluating the difference between sockets and cores in a VMware ESXi 6.7 environment. Is it better to have a single socket with 4 cores? Or a VM with 2 sockets of 2 cores each, resulting in the same 4 vCPUs? Or what about 4 sockets with a single core each, is that the best? Or does the sockets vs cores debate not even matter? Do you add more cores? Do you add more sockets? Do you use minimal sockets? Do you match the virtual sockets to physical sockets? Do you never exceed the host's physical cores per socket? Somebody tell me how to configure the sockets and cores for my dang virtual machine!

Contents:

- Introduction

- Testing Environment

- Testing Methodology

- Results - vCPU Baseline

- Results - 48 vCPU Configurations

- Results - 12 vCPU Configurations

- Results - Additional vCPU Configurations

- Additional Stats

- Conclusion

- Caveats

- What is Next?

- Further Reading

Testing Environment

We will have a single Windows Server 2019 Datacenter Edition (version 1809, build 17763.107) guest OS running on a VM, with different CPU configurations of sockets and cores. The Windows OS is a default install with only VMware Tools and the CPU testing tool installed, 16GB of vRAM, and a 40GB VMDK. Hot add is disabled. (Bonus info: a default Windows 2019 install consumes 14.3GB.)

The ESXi host hardware consists of two Intel Xeon E5-2650 v4 2.20GHz 12-core CPUs with hyperthreading enabled and 256GB of RAM (128GB per socket). The VM's storage is on direct-attached SSD spanned across 6 drives, but storage should have no impact on these tests, as the entire app and its results fit in RAM. The ESXi host runs only the testing VM, so there is no outside contention, except perhaps from the hypervisor itself in some situations. The ESXi host has no advanced configurations or other features enabled.

For workload generation, the world-famous y-cruncher tool will be used, specifically the Windows binaries for version 0.7.8 build 9503. y-cruncher has many uses, but its main one is calculating pi to as many digits as you desire. It is the tool that has helped many people break world records for pi digit calculation! y-cruncher has a performance testing mode that calculates a set number of pi digits entirely in RAM, so we get as close to a compute stress test as possible. The calculation is both memory and CPU intensive and is known to parallelize very efficiently. Plus, it goes full speed as soon as you hit go.

I do have to acknowledge that RAM speed plays a part in the actual results, but I am not looking to break any speed records, just to compare performance on the same hardware with different virtual configs. CPU frequency also affects how quickly results are calculated, but again, we are not looking to break records, just to compare socket and core configurations for VMs.

Testing Methodology

The first round of tests will be with the VM configured with 1 socket and varying cores per socket. For example, test one will be 1 socket with 1 core, resulting in 1 vCPU. Then 1 socket with 2 cores, 1 socket with 4 cores, and so on, all the way to 1 socket with 48 cores, resulting in 48 vCPUs. Remember this is the virtual hardware design, not the underlying physical hardware.

Then we will move to 2 sockets with various cores per socket, 4 sockets also with various cores per socket, then larger numbers of sockets with only a single core per socket: 12 sockets with 1 core per socket, 24 sockets, etc.
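Every layout in this test plan is just a factor pair of the vCPU count: sockets x cores-per-socket = vCPUs. As a small sketch for planning a test matrix like this one (the `layouts` helper is my own, hypothetical name, and it deliberately ignores any per-VM socket or cores-per-socket limits ESXi may impose), here is one way to list every possible layout for a given vCPU count:

```python
def layouts(vcpus: int) -> list[tuple[int, int]]:
    """Return every (sockets, cores_per_socket) pair whose product is vcpus.

    Hypothetical planning helper: it does not check ESXi's own limits on
    virtual sockets or cores per socket.
    """
    return [(s, vcpus // s) for s in range(1, vcpus + 1) if vcpus % s == 0]


if __name__ == "__main__":
    for n in (12, 48):
        combos = layouts(n)
        print(f"{n} vCPU: {len(combos)} layouts -> {combos}")
```

For 12 vCPU this yields six layouts, from 1 socket x 12 cores down to 12 sockets x 1 core; 48 vCPU yields ten, including the 16 sockets x 3 cores layout.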

When performing the actual test, the steps will be:

- Configure the sockets & cores

- Power on the VM

- Log in and wait 30 seconds

- Verify the sockets & cores match in Task Manager, then close Task Manager

- Run y-cruncher and record the results

- Close y-cruncher and repeat the y-cruncher test

- Power off the VM and repeat the entire process

y-cruncher will be set to calculate 2.5 billion (2,500,000,000) digits of pi for each run. Tests are repeated 5 times for each socket / cores-per-socket configuration (except on single and dual vCPU, because those take forever).

Calculating 2.5 billion digits consumes roughly 11.9GB of RAM.

As I write this introduction I have no idea what the results will be... so let's get kickin' and see what happens!

Results - vCPU Baseline

Let's first take a look at the data from a vCPU standpoint to get a baseline. The vCPU count is irrespective of sockets and cores-per-socket; you may also think of vCPUs as the total number of cores.

This first graph shows how long it took, in seconds, to calculate 2.5 billion digits of pi based on how many vCPUs the VM has assigned. Lower is better. As y-cruncher can work with multiple threads, the more cores, the faster the results are computed. Unsurprisingly, 48 vCPUs is faster than any other configuration.

Here is a table view of the same data: the first column is the number of vCPUs and the second column is the compute time for 2.5B digits. I also added two more datapoints: the seconds per vCPU ("Comp Time" / vCPU) and the number of seconds it took per 100,000 digits ("Comp Time" / 25,000, since 2,500,000,000 / 100,000 = 25,000).
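The two derived columns are simple arithmetic. 2.5 billion digits is 25,000 blocks of 100,000 digits, so seconds per 100,000 digits is the compute time divided by 25,000. Below is a minimal sketch; the function and constant names are hypothetical, and the sample inputs are the fastest and slowest single runs listed in the Additional Stats table, not the averaged values in the table above.

```python
DIGITS = 2_500_000_000  # digits of pi calculated per run
BLOCK = 100_000         # report time per 100,000 digits


def derived_metrics(comp_time_s: float, vcpus: int) -> tuple[float, float]:
    """Return (seconds per vCPU, seconds per 100,000 digits) for one result."""
    per_vcpu = comp_time_s / vcpus
    per_block = comp_time_s / (DIGITS / BLOCK)  # DIGITS / BLOCK == 25,000
    return per_vcpu, per_block


if __name__ == "__main__":
    # Fastest (48 vCPU) and slowest (1 vCPU) single runs from Additional Stats.
    for label, t, n in (("48 vCPU", 88.019, 48), ("1 vCPU", 2111.438, 1)):
        per_vcpu, per_block = derived_metrics(t, n)
        print(f"{label}: {per_vcpu:.3f} s/vCPU, {per_block:.6f} s per 100k digits")
```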

If you compare the results for 24 vCPUs with 26 and 32, you may see something surprising: 26 and 32 took longer to calculate than 24. Recall that my physical setup is dual 12-core CPUs with hyperthreading enabled. Up through 24 assigned vCPUs, y-cruncher runs entirely on the same physical CPU, even with 16-24 vCPUs assigned to the VM, because ESXi takes advantage of hyperthreading.

Hyperthreading basically allows two compute threads to run on the same core near simultaneously, so to ESXi a 12-core CPU with HT enabled "appears" as 24 logical processors. When the VM has 26 or 32 vCPUs assigned and y-cruncher is kicked off, part of the workload executes on the second physical CPU, and since the application needs to share data between the two sockets, remote memory access adds latency. This is where "NUMA" comes into play (do a web search for more info; I may do a post on NUMA in the future).

The benefit of going beyond 24 vCPUs returns at 36 vCPUs and continues all the way up through 48 vCPUs. Again, these results show 48 vCPUs provides the fastest results.

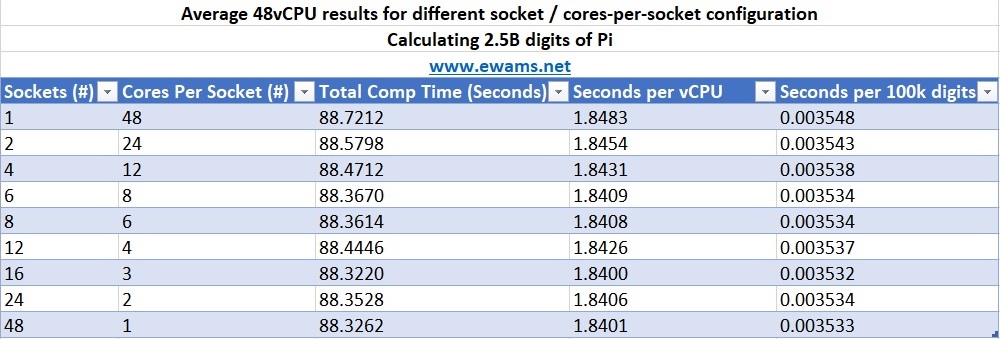

Results - 48 vCPU Configurations

Since 48 vCPU was our fastest result, let's take a detailed look at the different 48 vCPU configurations. Remember we are comparing different virtual socket and virtual cores-per-socket configurations for virtual machines to see if one is "better" than another.

Keep looking at that table. Because... well... I am sorry, dear reader, but there is no meaningful difference between any of the configurations. A single socket with 48 cores performs the same as 16 sockets with 3 cores-per-socket. y-cruncher was run 5 times for each configuration, and yes, the table shows averaged numbers, but feel free to download the CSV data and look yourself... there is no difference in the results based on socket and cores-per-socket configuration.
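If you want to check the "no difference" claim against the downloadable CSV, a sketch like the one below groups runs by layout and reports each layout's mean and standard deviation, plus the gap between the slowest and fastest layout means for one vCPU count. The column names (`vcpu`, `sockets`, `cores_per_socket`, `seconds`) are assumptions, not the real CSV headers; rename them to match the actual file.

```python
import csv
import statistics
from collections import defaultdict


def summarize(lines):
    """Group run times by (vCPU, sockets, cores-per-socket).

    `lines` is any iterable of CSV text lines (e.g. an open file). The column
    names used here are hypothetical. Returns {layout: (mean_s, stdev_s)}.
    """
    runs = defaultdict(list)
    for row in csv.DictReader(lines):
        key = (int(row["vcpu"]), int(row["sockets"]), int(row["cores_per_socket"]))
        runs[key].append(float(row["seconds"]))
    summary = {}
    for key, times in sorted(runs.items()):
        sd = statistics.stdev(times) if len(times) > 1 else 0.0
        summary[key] = (statistics.mean(times), sd)
    return summary


def spread_pct(summary, vcpu):
    """Gap between the slowest and fastest layout means for one vCPU count,
    as a percentage of the fastest mean (raises ValueError if vcpu is absent)."""
    means = [m for (v, _, _), (m, _) in summary.items() if v == vcpu]
    return 100.0 * (max(means) - min(means)) / min(means)
```

Usage would look like `with open("results.csv", newline="") as f: summary = summarize(f)` followed by `spread_pct(summary, 48)`, where `results.csv` is a placeholder name for the downloaded data. A spread that is small compared with each layout's own run-to-run standard deviation is consistent with "no difference".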

Maybe we are seeing these results because we are spreading the workload across 2 physical CPU sockets? Let's look at a different number of vCPUs.

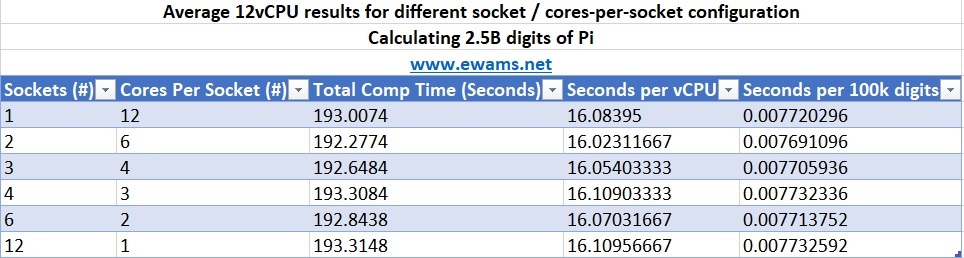

Results - 12 vCPU Configurations

12 vCPU is our next test. Since a 12 vCPU VM, regardless of socket configuration, fits entirely in a single NUMA node / physical CPU, maybe we will see something different.

Nothing. Nada. Zip. No difference. It is at this point, dear reader, that I was reminded of my bit.ly research from a decade ago, where one simple mistake almost cost me a lot of time and money. But alas, after reviewing both the vSphere 6.7 and vSphere 6.5 performance guides for the 50th time in my career, I realized the world we live in does not care about cores-per-socket. Maybe back in the pre-4.5 days (all hail ESX 3.1) it mattered, but today it does not, at least not with these hardware and virtual CPU configurations.

Results - Additional vCPU Configurations

I ran the test on every configuration for 48, 32, 16, 12, 4, 2 and, of course, 1 vCPU, and the results were the same. You can download all the results to look for yourself. For each of those vCPU counts, the compute time did not change regardless of how many sockets or cores-per-socket the VM was configured with. I even purposely tested every 32 vCPU configuration, since 32 vCPU was slower than 24 vCPU because of remote memory retrievals, but it showed no change in computation time either.

Additional Stats

Because I want to rub it in my own face:

| Stat | Value |
|---|---|
| Total Tests Performed | 368 |
| Total Calculation Time | 33657.581 seconds (~9.3 hours) |
| Total Digits Calculated | 428,000,000,000 (428 billion) |
| Fastest Time | 88.019 seconds (48 vCPU with 16 sockets, 3 cores-per-socket) |
| Slowest Time | 2111.438 seconds (1 vCPU with 1 socket, 1 core-per-socket) |
| Total Configurations Tested | 33 |
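One quick way to read the fastest and slowest rows together is as speedup and parallel efficiency. Keep in mind these are single best/worst runs rather than averages, and the helper names below are my own, hypothetical ones:

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """How many times faster the parallel run finished than the serial one."""
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, vcpus: int) -> float:
    """Speedup divided by vCPU count (1.0 would be perfectly linear scaling)."""
    return speedup(t_serial, t_parallel) / vcpus


if __name__ == "__main__":
    # Slowest 1 vCPU run vs fastest 48 vCPU run, from the table above.
    s = speedup(2111.438, 88.019)
    e = efficiency(2111.438, 88.019, 48)
    print(f"speedup {s:.2f}x, efficiency {e:.1%}")
```

This works out to roughly a 24x speedup on 48 vCPUs, or about 50% parallel efficiency.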

Conclusion

I am surprised to say that, at least with this testing configuration, there appears to be no impact on virtual machine performance from different core and socket configurations. Now, NUMA might get you, depending on your application's workload profile, so be careful. y-cruncher obviously performs better the more vCPUs it has, but an 8-sockets-2-cores-per-socket VM is going to perform the same as a 4-sockets-4-cores-per-socket VM and the same as a 1-socket-16-cores-per-socket VM, since they all equal 16 vCPUs.

This may not always have been the case, especially in ESX 4.0 and earlier, but in ESXi 6.5 and newer this appears to be the result. So have fun, because apparently the answer is that it does not matter how many virtual cores or virtual sockets you have; all that matters is your vCPU count.

Now, this does not mean more is always better; you can definitely cause problems if you give all your VMs 24 or 48 cores. But at least now we know that, from the VM standpoint, it does not matter whether you configure more sockets or more cores. Feel free to download the CSV and review the results data for yourself; many different combinations were tested, all with the same result: no difference.

Caveats

Of course, "it depends" is going to be the correct answer for your environment. You may need more cores than sockets because of licensing, the application you are using, or an operating system that only supports a certain number of cores per socket. The results could also be impacted by your physical CPU/memory configuration, whether your application is multithreaded, or how truly busy each thread is. Things also change with every update: VMware has made many changes to its scheduler over the years, sometimes even in minor releases. At the time of writing ESXi 7.0 is out, but this test is on 6.7, and I have not read the release notes to see whether there was an impact to the scheduler. What about Spectre/Meltdown mitigation? Or new CPU architectures? Hyperthreading? C-states and P-states? Even vs. odd cores and sockets? Is the application NUMA or vNUMA aware? The best answer would be to ask your hardware and software vendors. You can pay the same $200/hr as everyone else to let me talk to you about CPU Ready times.

What is Next?

- I would love to repeat this test on a new ARM system. (hint hint Marvell hook me up with that 96 core ThunderX3!)

- Ditto for AMD to keep it on x86.

- 4-socket physical systems?

- What about large memory interactions - maybe do a Pi calc with 200-500GB?

- Maybe repeat the 12, 16, 24, and 48 core tests using HammerDB on MS SQL?

- How do multiple VMs competing for CPU resources (or sharing them with no contention) impact results?

- Different hypervisors?

- NUMA / vNUMA?

Further Reading

- The "VMW Recommendation" everyone always refers to: https://blogs.vmware.com/performance/2017/03/virtual-machine-vcpu-and-vnuma-rightsizing-rules-of-thumb.html

- Great write-up on vSphere scheduler and NUMA/vNUMA: https://frankdenneman.nl/2016/12/12/decoupling-cores-per-socket-virtual-numa-topology-vsphere-6-5/

- MS SQL and NUMA: https://docs.microsoft.com/en-us/sql/database-engine/configure-windows/soft-numa-sql-server?redirectedfrom=MSDN&view=sql-server-ver15

- Not all hypervisors are the same, see OVM and "reduced performance" used all over the place: https://www.oracle.com/technetwork/server-storage/vm/ovm-performance-2995164.pdf

*Update 4/20/2020* - Added the "Additional vCPU Configurations" section, as I originally left it out and received several emails suggesting I test other configs. I did test them originally, but my disappointment in the results left me so flustered that I forgot to add them to this writeup.

*disclaimer* Your cores and your sockets are your problem. This document and presentation is my own and does not represent anything from any other entity. I will not be held liable for anything bad that comes of it.

Written by Eric Wamsley

Posted: April 17th, 2020 3:32pm
